Open Source Database Schemas

While the foundational protocols for the internet like TCP/IP and UDP were developed in the mid to late 1970s, it wasn’t until the early 1990s when HTTP and the modern web browser took shape that the technologies began to coaelsce into something the world was able to adopt en masse.

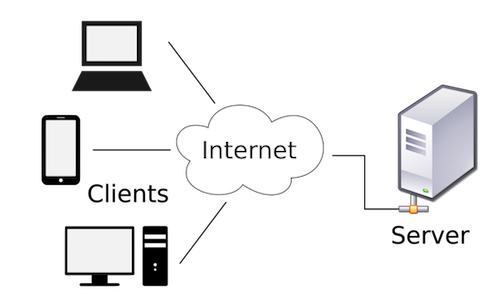

The “world wide web” offered an easy and inexpensive way to distribute and access information at levels that were never before possible. Fundamentally, the system is made up of “Servers” that make information and applications available for “Clients” to consume and interact.

But while traditional protocols like HTTP have offered access for everyone in the world to find information and interact with applications, ownership of the data is still heavily centralized with a limited number of organizations, as network effects and economies of scale encourage power law distributions where a select few massive winners comprise most of the market. This is demonstrated in Google’s ability to serve as the world’s best demand fulfillment engine, Amazon’s ability to sell you anything, and Facebook’s ability to connect anyone. As they extend their lead in servicing your demands, they attract more users and information and are able to continuously reduce their unit costs.

Because we expect any organization to act in its own self interest, we would thus expect that this massive centralization of data will lead Facebook, Apple, Amazon, etc… to create moats to ensure they remain the sole provider of this data, which only serves to reinforce their dominance. We’ve already seen this play out, as virtually all of the largest companies in the world are now software companies (this trend will continue):

Largest US Companies by Market Cap (2018)

| Rank | First Quarter | Second Quarter |

|---|---|---|

| 1 | Apple $851M | Apple $909M |

| 2 | Alphabet 717M | Amazon 824M |

| 3 | Microsoft 702M | Alphabet 774M |

| 4 | Amazon 700M | Microsoft 757M |

The Reality of Centralized Data

As Peter Thiel likes to say, competition is for losers. While this is certainly true from the lens of the monopolist, how does this perspective affect the rest of us?

Unsurprisingly, it doesn’t work out very well for us. One recent example demonstrating the possible negative externalities of centrally controlled data is the 87 million Facebook users who unwittingly provided personal information that was allegedly used to gerrymander the most recent presidential election.

Another glaring example is the recent interaction between Epic EHR (and billionaire) CEO Judy Faulkner and former Vice President Joe Biden discussing Biden’s Cancer Moonshot initiative. When Biden asked how he might export his full medical record from a platform like Epic, Faulker responded:

Why would you want your medical records? They’re a thousand pages, of which you'd understand 10...

To which Biden responded:

None of your business! And if I need to, I’ll find someone to explain them to me and, by the way, I will understand a lot more than you think I do.

These aren’t the only issues surrounding the centralized data structures of the internet as it exists today. The network layer of the internet is also centralized, which means governments or other bad actors can intervene and disable internet access for entire nations by severing one or a select few network hubs. This played out during the Arab Spring where governments censored free speech and suppressed anti-incumbency by disabling access to a number of social media applications like Twitter & Facebook.

These examples are representative of the hazards related to monopolies and centralized data, power, and control. How can we be sure that government agencies, corporate CEOs and board members have our best interests in mind when it comes to our personal data and access to digital services?

Crypto & Data Sovereignty

New cryptographic protocols and their corresponding token economies have the potential to provide the necessary framework and incentive structures for individuals to take control over their data.

There are protocols under development today like Protocol Labs’ Interplanetary File System (IPFS) and InterPlanetary Linked Data (IPLD) that will enable the transition from a centralized web to a distributed network of information and services – where data exists independently of centrally controlled web servers and is not accessed through one single address but through a distributed network of nodes that store and serve information.

What’s more, we can easily encrypt and authenticate data by signing it using digital signatures. This method is superior to the security model of the web where technologies like TLS only encrypt the connection between peers, but do not encrypt or authenticate the data itself.

IPFS & IPLD also allow us to store and access information on the distributed web via massive directed object graphs using merkle links. This gives us similar functionality to foreign keys within relational database systems and model associations in ORMs like Ruby on Rails, where we can retrieve entire graphs of object dependencies.

Shared Database vs. Shared Database Schema

A database is where we store and access organized digital information.

A database schema details the types of data that can exist within a database and how this data is related. In relational database systems, data is typically organized into “tables” comprised of columns and rows. Generally, a table represents a distinct resource, like a new user or a product, where the rows within the table represent instances of that resource, e.g. my twitter account has a row in Twitter’s “User” table and has additional information in columns such as my twitter handle, my full name, email address, bio, etc…

As I delve deeper into the concepts behind the distributed web and distributed databases, where I get stuck is how decentralized applications will read and write information to this distributed database that is portable to any decentralized application. What use is having all this available data if there isn’t a consistent API or shared properties and datatypes for common resources? I’m not sure exactly what to call this, but it’s effectively an open source, shared database schema.

While IPLD does offer some insights into data formatting and establishes conventions by requiring other serialization formats to easily transcode into JSON, the question remains as to how we will define commonly used resources and their properties (like users.name, products.price, locations.country, etc…).

Since JSON seems like the most appropriate option for data storage and exchange given its popularity, flexibility and simplicity, it would therefore follow that we could embed some type of ontological resource validatation within JSON objects to declare to which standard resources want to comply.

The W3C, a longtime advocate of the semantic web, recently developed the JSON Linked Data (JSON-LD) spec, which aims to accomplish the following:

Linked Data [LINKED-DATA] is a way to create a network of standards-based machine interpretable data across different documents and Web sites. It allows an application to start at one piece of Linked Data, and follow embedded links to other pieces of Linked Data that are hosted on different sites across the Web

In addition to all the features JSON provides, JSON-LD introduces:

- a universal identifier mechanism for JSON objects via the use of [IRIs](https://www.w3.org/TR/rdf11-concepts/#dfn-iri)

- a way to disambiguate keys shared among different JSON documents by mapping them to IRIs via a context

- a mechanism in which a value in a JSON object may refer to a JSON object on a different site on the Web

- a way to associate datatypes with values such as dates and times

The item that catches my eye is the “@context” property, which essentially maps terms (any non reserved JSON-LD keyword) to IRI’s (Internationalized Resource Identifiers).

Take this example, where this JSON-LD document contains properties consistent with a person, which you can then validate against the Schema.org vocabulary:

{

"@context": "https://json-ld.org/contexts/person.jsonld",

"name": "AJ Solimine",

"homepage": "https://hitting406.com/",

"image": "https://hitting406.com/images/ajsolimine-canonical.jpg"

}

The use of contexts to establish a standard data schema & vocabulary is compelling. If we apply this concept to the distributed web, this would allow us to create a new object on our shared distributed database, and attach a reference property to validate my object against a shared schema (when writing to the db) or to transcode it to a spec that I’m expecting as an application developer if I’m querying objects I didn’t create.

While the JSON-LD implementation is a step forward towards interoperability and the concept of having an authoratative schema is directionally correct, there are 2 issues why I don’t think it has gained significant adoption like a similar concept would in a distributed web:

- The lack of a built-in authentication model thus discouraging adoption around sensitive information (relies on APIs for authentication)

- The use of a centralized schema definition

While the first issue around a flawed security model is substantial, I think the larger issue is that JSON-LD uses Schema.org to validate resources, and that Schema.org, while collaborative, is a consortium of mostly for-profit companies that clearly have their own incentives that aren’t necessarily aligned with public good.

An better alternative might be to store the schema on the distributed web itself, and to allow multiple schemas to compete with one another (where objects can just refer to a canonical schema address). While we unfortunately still likely would rely on a few authors & maintainers of this schema (again requiring some form of centralized power since schemas would be ubiquitous), allowing developers to select their schema might help mitigate this effect.

Dapp-centric JSON Schema Validation

Another interesting possibility is the use of the recently proposed IETF JSON Schema Standard to describe and validate common JSON metadata. An example Schema is comprised of a few properties:

$schemastates that this schema is written according to the a specific draft of the standard and used for a variety of reasons, primarily version control.$iddefines a URI for the schema, and the base URI that other URI references within the schema are resolved against.titleanddescriptionare annotation keywords and are descriptive only. They do not add constraints to the data being validated. The intent of the schema is stated with these two keywords.typeis a validation keyword defines the first constraint on our JSON data and in this case it has to be a JSON Object.

Here’s a reasonably complex example of the JSON Schema standard in action. You’ll see that we can do things like embed other objects with their own schema validation references using the $ref attribute.

{

"$schema": "http://json-schema.org/draft-07/schema#",

"$id": "http://example.com/product.schema.json",

"title": "Product",

"description": "A product from Acme's catalog",

"type": "object",

"properties": {

"productId": {

"description": "The unique identifier for a product",

"type": "integer"

},

"productName": {

"description": "Name of the product",

"type": "string"

},

"price": {

"description": "The price of the product",

"type": "number",

"exclusiveMinimum": 0

},

"tags": {

"description": "Tags for the product",

"type": "array",

"items": {

"type": "string"

},

"minItems": 1,

"uniqueItems": true

},

"dimensions": {

"type": "object",

"properties": {

"length": {

"type": "number"

},

"width": {

"type": "number"

},

"height": {

"type": "number"

}

},

"required": [ "length", "width", "height" ]

},

"warehouseLocation": {

"description": "Coordinates of the warehouse where the product is located.",

"$ref": "https://example.com/geographical-location.schema.json"

}

},

"required": [ "productId", "productName", "price" ]

}

We can also do things like validate the data type using $type, check that a property is required, and perform more specific validations on numbers.

This is interesting, as a decentralized application could use JSON schemas hosted in IPFS to ensure that any new JSON object conforms to all validation rules of the applicable JSON schema. In this scenario, the dapp developers would most likely be the ones creating the schemas, so the authoritative schema design for a particular object would most likely be dictated by the more popular dapp developers. I’m not sure I’m totally in love with that, but it’s probably the most likely case as every individual dapp developer has an incentive to describe objects that are the most relevant to their dapp. This is similar, but perhaps much more agile than the development of microformat standards.

Machine Learning & AI

While writing all of this has been a useful thought exercise, I can’t help but hear the echoes of all of the critics of the semantic web, arguing that it’s a hopeless exercise to assume some collaborative human effort could define something so complex, and that attempting to manually construct and maintain the semantic web is a foolish endeavor.

Google has almost single-handedly proven that probabilistic modeling is more effective than manual models when it comes to mapping disparate resources. Human coordination is too time consuming and costly, so machines are much better suited to make inferences on data associations on our behalf.

So maybe computers should write and publish the schema we should use via probabalistic modeling by analyzing what everyone is publishing as IPFS documents, in essence creating and maintaining an evolving dictionary that both humans and application developers can understand.

Call it – an observational schema – one that is continuously updated as the overall system adapts to use. If a specific object type begins to contain an additional property with accelerating consistency, then the schema can adapt further.

Whatever solution emerges, I do believe the distributed web will need some sort of shared database schema. In practice, I think it will be some combination of machine learning and individually mantiained JSON Schemas hosted as their own JSON documents on IPFS that succeed.

In any case, I’m excited to revisit this post in 5-10 years to see how things unfold!